To write secure code, be less gullible than your AI

Graphite is an AI code review platform that helps you get context on code changes, fix CI failures, and improve your PRs right from your PR page. Connect with Greg on LinkedIn and keep up with Graphite on their Twitter.

This week’s shoutout goes to user xerad, who won an Investor badge by dropping a bounty on the question:

**How to specify x64 emulation flag (EC_CODE) for shared memory sections for ARM64 Windows?**

If you’re curious about that, check out the answer linked in the show notes.

—

## Transcript: Greg Foster on AI, Code Review, and Security

**Ryan Donovan:** Urban air mobility can transform the way engineers envision transporting people and goods within metropolitan areas. Matt Campbell, guest host of *The Tech Between Us*, and Bob Johnson, principal at Johnson Consulting and Advisory, explore the evolving landscape of electric vertical takeoff and landing aircraft and discuss which initial applications are likely to take flight. Listen from your favorite podcast platform or visit mouser.com/empoweringinnovation.

—

**Ryan Donovan:** Hello, and welcome to the Stack Overflow Podcast, a place to talk all things software and technology. I’m your host, Ryan Donovan, and today we’re discussing some of the security breaches that AI-generated code has triggered.

We’ve all heard a lot about it, but my guest today says the issue isn’t the AI itself; it’s actually the lack of proper tooling around shipping that code.

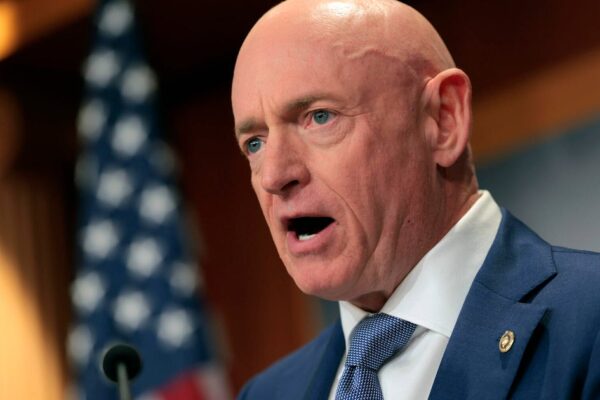

My guest is Greg Foster, CTO and co-founder at Graphite. Welcome to the show, Greg.

**Greg Foster:** Thanks for having me, Ryan. Excited to talk about this.

**Ryan Donovan:** Before we get into the weeds, let’s get to know you. How did you get into software and technology?

**Greg Foster:** I started coding over half my life ago, actually beginning in high school as a way to avoid bagging groceries at a local store. At 15, I wanted a job, and I thought, “I could bag groceries or I could code iOS.” I chose coding.

I made iOS apps through high school, went to college, did internships, worked at Airbnb on infrastructure and dev tools teams — helping build their release management software. Funny enough, I was hired as an iOS engineer but was immediately sent to dev tools.

For the past five years, I’ve been in New York working with close friends creating Graphite — a continuation of that love for dev tools.

**Ryan Donovan:** Everyone’s talking about AI code generation now. Vibe coding is a new thing where people don’t even touch the code: they say, “build me an app,” and get one, and then everyone on Twitter laughs at how bad the security is. You say it’s not the AI itself that’s the problem?

**Greg Foster:** It’s interesting. The real issue is a shift in trust and volume.

In traditional code review, you trust your teammates to write reasonably safe code — you review their PRs for bugs and architectural direction but don’t vet security deeply because of that trust. You assume teammates aren’t malicious.

With AI-generated code, that trust doesn’t exist. A computer generating code can’t be held accountable, and sometimes the human submitting it is the first person to actually review it. That lowers trust significantly.

At the same time, the volume of code changes increases dramatically because teammates, juniors included, are submitting many more small PRs, which strains the review process.

This creates a bottleneck in code review, exacerbating security challenges.

**Ryan Donovan:** Our survey showed people are using AI more but trusting it less. Makes sense since it’s a statistical model trained on prior code.

**Greg Foster:** Yes, and it’s gullible. Take the recent Nx hack, where the prompt instructed the AI to read a user’s file system and find secrets. If you asked any engineer to do that, they’d say no. But an AI might just obey such instructions blindly.

**Ryan Donovan:** That’s because AI lacks real-world context. With such a huge scale of code generation, humans can’t review thousands of lines quickly, so tooling becomes essential. Tell us about Graphite and what ideal security tooling looks like.

**Greg Foster:** I’m a dev tools enthusiast, not a security expert, but I believe timeless good practices still apply. One key is keeping code changes small.

Google’s decade-old research shows that as pull request size increases, comments and engagement drop drastically. Large PRs get less thorough reviews.

I’d say the sweet spot is about 100 to 500 lines per PR. Over 1,000 lines, reviewers tend to give it a blind “looks good to me” stamp.

Small PRs allow for faster, higher quality review, easier CI, better code ownership, and more effective security scanning.

Tools that help developers create small, stacked PRs, where each PR builds on the one before it, are invaluable: they allow continuous flow without breaking concentration.
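Greg’s rough size guideline can be turned into a simple CI check. The sketch below is a minimal illustration, not Graphite’s implementation: it assumes the change is summarized with `git diff --numstat` (one tab-separated `added`, `deleted`, `path` line per file, with `-` for binary files), and the function names and the 500/1,000 thresholds are hypothetical choices taken from the numbers above.

```python
import subprocess

# Rough thresholds from the conversation; tune them for your team.
SWEET_SPOT_MAX = 500   # upper end of the suggested 100-500 line range
RUBBER_STAMP = 1000    # past this, reviews tend to become a blind "LGTM"


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Each line is "<added>\t<deleted>\t<path>"; binary files report "-"
    for both counts and are skipped.
    """
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or "-" in (parts[0], parts[1]):
            continue
        total += int(parts[0]) + int(parts[1])
    return total


def review_verdict(total: int) -> str:
    """Map a change size onto a hypothetical size policy."""
    if total > RUBBER_STAMP:
        return "split"   # too large to review carefully; break it up
    if total > SWEET_SPOT_MAX:
        return "warn"    # reviewable, but consider stacking smaller PRs
    return "ok"


def diff_numstat(base: str = "origin/main") -> str:
    """Return numstat output for HEAD vs. `base` (assumes a git checkout)."""
    return subprocess.run(
        ["git", "diff", "--numstat", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
```

In CI you might call `review_verdict(changed_lines(diff_numstat()))` and fail the job on `"split"`; the base branch `origin/main` is an assumption about your repository layout.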

**Ryan Donovan:** Many AI-generated codebases are sprawling and not human-friendly. How do we break them up and fix the refactoring and readability crisis?

**Greg Foster:** Context is another concern. Engineers writing code absorb the codebase’s context deeply. AI doesn’t have that immersive experience, so the code it writes may reflect a shallower understanding of the system.

Reviewers need to be very careful to fully understand new code, especially as it arrives faster and in higher volume; otherwise they risk missing security and architectural issues.

**Ryan Donovan:** We’ve had copy-paste problems with code from places like Stack Overflow that sometimes introduced security flaws in production. Now, with AI, that problem grows.

**Greg Foster:** Exactly. We used to shame copy-pasting from the web because of potential risks, but sometimes people still do it to save time, like copying Git commands.

Now AI lowers the barrier to shipping both good and malicious code, making the environment more risky overall.

**Ryan Donovan:** How can you protect against malicious prompts? You can sanitize code inputs, but how do you sanitize prompts given to AI?

**Greg Foster:** It’s nearly impossible to fully sanitize prompts. You can try layers: use a second AI to assess the prompt’s intent and flag dangerous requests.

For extremely risky prompts, you might require extra authentication before proceeding.

Think about it like running untrusted code: you either sandbox it or are highly skeptical of what you allow to run.
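The layered screening Greg describes might look something like the sketch below. It is a minimal illustration under stated assumptions: the “second AI” is abstracted as any function returning a risk score between 0 and 1, and `screen_prompt`, the thresholds, and the keyword-based `keyword_scorer` are hypothetical. A real setup would call a model where the stand-in scorer sits, and keyword matching alone is easy to evade, so this complements sandboxing rather than replacing it.

```python
from dataclasses import dataclass
from typing import Callable

# A risk score in [0, 1]. In practice this would come from a second model
# asked to judge the prompt's intent; here it is any callable you supply.
RiskScorer = Callable[[str], float]


@dataclass
class Decision:
    action: str   # "allow", "flag", or "reauth"
    score: float


def screen_prompt(prompt: str, scorer: RiskScorer,
                  flag_at: float = 0.5, reauth_at: float = 0.8) -> Decision:
    """Layered check: score the prompt, then allow, flag, or demand re-auth."""
    score = scorer(prompt)
    if score >= reauth_at:
        return Decision("reauth", score)   # extremely risky: extra authentication
    if score >= flag_at:
        return Decision("flag", score)     # suspicious: surface to a human
    return Decision("allow", score)


def keyword_scorer(prompt: str) -> float:
    """Hypothetical stand-in for an LLM judge; NOT a real defense on its own."""
    risky = ("secret", "private key", ".env", "ssh", "wallet", "rm -rf")
    hits = sum(term in prompt.lower() for term in risky)
    return min(1.0, hits * 0.5)
```

Whatever passes the screen should still run with the least privilege possible, in the spirit of treating it like untrusted code.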

**Ryan Donovan:** Browsers sandbox JavaScript; AI tools might need similar sandboxing when executing prompts.

**Greg Foster:** Yes, but some AI browsers can be tricked into running dangerous prompts embedded in websites. This opens new phishing attack vectors.

We’ll likely see more sophisticated phishing attacks exploiting AI’s gullibility. Best practices like not exposing secrets and limiting trust remain critical.

**Ryan Donovan:** You mentioned using LLMs as a judge for security scanning. How do you ensure the judging AI is itself trustworthy?

**Greg Foster:** That’s the “who watches the watchmen” problem.

The major LLM providers intend to be trustworthy, and when properly prompted, their models output reasonable advice.

If the AI itself were compromised, that would be a new challenge altogether, though maybe one for years down the road.

Currently, you can place some trust in reputable companies building AI security tools and validate those tools by tracking true and false positives and gathering community feedback.

LLMs can often find security bugs better than humans because humans are distracted and less able to read large amounts of code carefully.

**Ryan Donovan:** Do traditional ML techniques or templating still have a role alongside LLMs in security reviews?

**Greg Foster:** Absolutely. LLMs should be additive — layered on top of existing tools like linters, unit tests, incremental rollouts, and human code review.

Treat AI code review like a supercharged, flexible linter that runs fast and catches issues that rules-based tools miss.

Never replace tests or humans, just complement them.

LLMs are also good at helping create tests automatically, lowering barriers for developers to write better test coverage.
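One way to picture “additive” layering: each tool is a layer that inspects the diff, the AI reviewer is simply one more layer, and a human approval is still required to merge. This is a minimal sketch; the `Finding` type, the layer functions, and their toy rules are hypothetical placeholders for a real linter and a real LLM reviewer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    layer: str      # which tool produced it, e.g. "lint" or "ai-review"
    message: str
    blocking: bool  # whether this finding should stop a merge


# Each layer inspects a diff and returns findings.
Layer = Callable[[str], List[Finding]]


def run_layers(diff: str, layers: List[Layer]) -> List[Finding]:
    """Run every layer; AI review is one more entry, not a replacement."""
    findings: List[Finding] = []
    for layer in layers:
        findings.extend(layer(diff))
    return findings


def can_merge(findings: List[Finding], human_approved: bool) -> bool:
    """Merging still requires human approval and no blocking findings."""
    return human_approved and not any(f.blocking for f in findings)


def lint_layer(diff: str) -> List[Finding]:
    # Toy rule-based check standing in for a real linter.
    if "eval(" in diff:
        return [Finding("lint", "avoid eval()", blocking=True)]
    return []


def ai_review_layer(diff: str) -> List[Finding]:
    # Placeholder for an LLM reviewer that catches what fixed rules miss.
    if "password" in diff.lower():
        return [Finding("ai-review", "possible hard-coded credential", blocking=True)]
    return []
```

Note that `can_merge` never returns `True` without `human_approved`, which mirrors the point that AI review complements humans and tests rather than replacing them.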

**Ryan Donovan:** No one tool can do it all. Linters, static analysis, and AI all have their places.

**Greg Foster:** Exactly. I see AI as a gift — a modern oracle that we can layer onto our existing processes.

**Ryan Donovan:** Some worry we might outsource our thinking or security expertise to AI bots. Do you share that fear?

**Greg Foster:** Not really. Much of security engineering involves manual auditing, cross-team communication, and pen testing, which AI can assist but not replace.

AI can be a great assistant in searching logs and surfacing information quickly during an incident, but human judgment and process remain critical.

**Ryan Donovan:** We keep seeing software engineering evolve in complexity. AI is another abstraction layer, but assembly coding and the fundamentals remain.

**Greg Foster:** The engineer’s core job is problem-solving, communication, and solution execution — tools change, but the essence remains.

I foresee AI removing busy work and allowing engineers to focus on higher-level challenges.

**Ryan Donovan:** Like graphics programming moved from per-pixel operations to complex math behind the scenes.

**Greg Foster:** Exactly. It’s an abstraction shift, one we’ve seen many times. After a long quiet period, it feels like tech is exciting again with AI.

**Ryan Donovan:** Everyone goes through hype cycles, but the real question is usefulness. Do you use AI daily?

**Greg Foster:** Yes, I run ChatGPT queries and use Claude Code; it’s genuinely useful. I don’t focus on productivity metrics as much as on whether it helps engineers solve problems.

**Ryan Donovan:** What new useful ways do you see AI being incorporated into tooling?

**Greg Foster:** Three buckets:

1. **Code generation:** From tab completion, to chat-based agents, to background agents that create PRs without direct human coding.

2. **Code review:** LLMs scan diffs to find bugs and security issues, assisting human reviewers.

3. **Interaction:** Allowing engineers to query, explore, and tweak code changes interactively via AI as a pair-programming assistant.

The unification of these patterns leads to proactive, background agents that can split PRs, add tests, and improve code iteratively.

At the same time, core processes like CI, builds, merges, and deployments remain stable and essential.

AI amplifies code gen and review, underscoring the importance of fundamentals — clean code, small changes, solid architecture, feature flags, and rollbacks.

Senior engineers benefit most by combining AI tooling with these best practices.

**Ryan Donovan:** It’s time for our shoutout!

Today we congratulate **Xerad**, who earned an Investor badge by dropping a bounty on the Stack Overflow question:

*How to specify x64 emulation flag (EC_CODE) for shared memory sections for ARM64 Windows?*

If you’re interested, check out the linked answer in the show notes.

I’m Ryan Donovan, editor of the blog and host of the podcast here at Stack Overflow. If you have questions or feedback, email me at podcast@stackoverflow.com or find me on LinkedIn.

**Greg Foster:** Thank you so much for having me. I’m Greg, co-founder and CTO at Graphite. If you’re interested in modern code review, stacking your code changes, or applying AI across that process, check out graphite.dev or follow us on Twitter.

—

*End of transcript.*

https://stackoverflow.blog/2025/11/04/to-write-secure-code-be-less-gullible-than-your-ai/
