Researchers Introduce ACE, a Framework for Self-Improving LLM Contexts

Researchers from Stanford University, SambaNova Systems, and UC Berkeley have introduced Agentic Context Engineering (ACE), a framework that improves large language models (LLMs) by evolving structured contexts rather than updating model weights. The goal is to let language models improve themselves without retraining.

LLM-based systems typically rely on prompt or context optimization to strengthen reasoning and overall performance. Existing techniques such as GEPA and Dynamic Cheatsheet have delivered gains, but they tend to favor brevity. That emphasis can lead to “context collapse,” in which essential details are lost as the context is rewritten over and over.

ACE addresses this issue by treating contexts as playbooks that evolve over time, refined through a modular process of generation, reflection, and curation. The framework has three main components:

- **Generator:** Produces reasoning traces and outputs.
- **Reflector:** Analyzes successes and failures to extract useful lessons.
- **Curator:** Integrates those lessons into the context as incremental updates.
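The generate-reflect-curate loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the three roles are stubbed as plain functions where a real system would call an LLM, and the playbook schema (numbered bullets with helpful/harmful counters) and every name below (`Playbook`, `apply_delta`, `generate`, `reflect`, `curate`) are hypothetical. What it shows is the incremental-update idea: the Curator appends new lessons and adjusts metadata on existing ones instead of rewriting the whole context.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Bullet:
    """One playbook entry (hypothetical schema): a lesson plus usage counters."""
    text: str
    helpful: int = 0
    harmful: int = 0


@dataclass
class Playbook:
    bullets: dict[int, Bullet] = field(default_factory=dict)
    next_id: int = 0

    def render(self) -> str:
        # Serialize the playbook into the text context handed to the model.
        return "\n".join(f"[{bid}] {b.text}" for bid, b in self.bullets.items())

    def apply_delta(self, lessons: list[str], tags: dict[int, bool]) -> None:
        # Incremental update: adjust counters on existing bullets, then append
        # new ones. Existing bullet text is never rewritten.
        for bid, helped in tags.items():
            bullet = self.bullets.get(bid)
            if bullet is None:
                continue
            if helped:
                bullet.helpful += 1
            else:
                bullet.harmful += 1
        for text in lessons:
            self.bullets[self.next_id] = Bullet(text)
            self.next_id += 1


def generate(task: str, playbook: Playbook) -> dict:
    # Generator stand-in: a real system would prompt an LLM with
    # playbook.render(); here we only record which bullets were in context.
    return {"task": task, "used": list(playbook.bullets)}


def reflect(trace: dict, success: bool) -> tuple[list[str], dict[int, bool]]:
    # Reflector stand-in: tag every bullet that was in context with the
    # outcome and, on failure, distill a new lesson from the trace.
    tags = {bid: success for bid in trace["used"]}
    lessons = [] if success else [f"Verify the output format for: {trace['task']}"]
    return lessons, tags


def curate(playbook: Playbook, lessons: list[str], tags: dict[int, bool]) -> None:
    # Curator stand-in: merge the Reflector's output as a delta.
    playbook.apply_delta(lessons, tags)


# One adaptation pass over (task, succeeded?) pairs.
playbook = Playbook()
for task, success in [("parse invoice", False), ("parse receipt", True)]:
    trace = generate(task, playbook)
    lessons, tags = reflect(trace, success)
    curate(playbook, lessons, tags)

print(playbook.render())
# [0] Verify the output format for: parse invoice
```

Because existing entries are only annotated or appended to, a lesson recorded early in adaptation is still present later, which is what distinguishes this style of update from repeated wholesale rewriting.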

To control the context's growth and avoid redundancy, ACE uses a “grow-and-refine” mechanism that merges or prunes context items based on their semantic similarity.
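The grow-and-refine step can be approximated as near-duplicate filtering. In this sketch, which is an assumption rather than ACE's actual code, a toy bag-of-words vector stands in for a real sentence-embedding model, `grow_and_refine` and the 0.8 cosine threshold are hypothetical, and "merging" simply means keeping the existing entry and discarding the redundant newcomer.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for a sentence-embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors (0.0 if either is empty).
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def grow_and_refine(items: list[str], new_items: list[str], threshold: float = 0.8) -> list[str]:
    # Grow: consider existing items first, then the incoming ones.
    # Refine: drop any item whose similarity to an already-kept item reaches
    # the threshold, so the earlier entry absorbs its near-duplicate.
    kept: list[str] = []
    vectors: list[Counter] = []
    for text in items + new_items:
        vec = embed(text)
        if any(cosine(vec, v) >= threshold for v in vectors):
            continue
        kept.append(text)
        vectors.append(vec)
    return kept


playbook = grow_and_refine(
    ["check the API response status before parsing"],
    ["check the API response status before parsing it", "paginate until the next cursor is empty"],
)
print(playbook)
# ['check the API response status before parsing', 'paginate until the next cursor is empty']
```

The pairwise comparison is quadratic in the number of items; a larger deployment would more likely use real embeddings with an approximate nearest-neighbor index.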

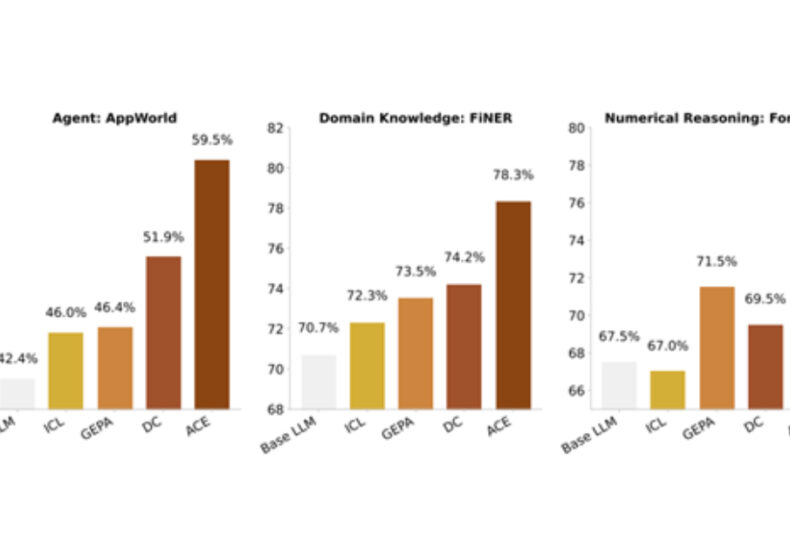

In evaluations, ACE delivered significant gains on both agentic and domain-specific tasks. On the AppWorld benchmark for LLM agents, ACE reached an average accuracy of 59.5%, outperforming prior methods by 10.6 percentage points and matching the top entry on the public leaderboard, a GPT-4.1-based agent from IBM.

On financial reasoning datasets such as FiNER and Formula, ACE delivered an average gain of 8.6%, with even stronger results when ground-truth feedback was available. The researchers also reported that ACE reduced adaptation latency by up to 86.9% and required more than 75% fewer rollouts than established baselines such as GEPA.

One notable advantage of ACE is that it lets models “learn” continuously through context updates while preserving interpretability. This is particularly valuable in sectors where transparency and selective unlearning are critical, such as finance and healthcare.

The community response has been optimistic. For instance, a Reddit user commented:

*“That is certainly encouraging. This looks like a smarter way to context engineer. If you combine it with post-processing and the other ‘low-hanging fruit’ of model development, I am sure we will see far more affordable gains.”*

In summary, ACE demonstrates that scalable self-improvement in large language models can be achieved through structured, evolving contexts. This presents a promising alternative to continual learning that avoids the high costs and complexities associated with retraining.

https://www.infoq.com/news/2025/10/agentic-context-eng/?utm_campaign=infoq_content&utm_source=infoq&utm_medium=feed&utm_term=global
